One API for Any Model

Access all major models through a single, unified interface. The OpenAI SDK works out of the box.
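As a rough illustration of what a single, OpenAI-compatible interface can look like in practice, the sketch below builds (but does not send) a chat completion request using only the Python standard library. The endpoint URL, the model identifier, and the helper name `build_chat_request` are assumptions made for illustration; check the current API documentation for the exact values.

```python
import json
import urllib.request

# Assumed endpoint: an OpenAI-style chat completions route.
CHAT_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_chat_request(api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request without sending it."""
    body = {
        "model": model,  # e.g. "openai/gpt-4o" (illustrative identifier)
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        CHAT_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Under these assumptions, switching models only means changing the `model` string. Passing the returned object to `urllib.request.urlopen` would actually send the request.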

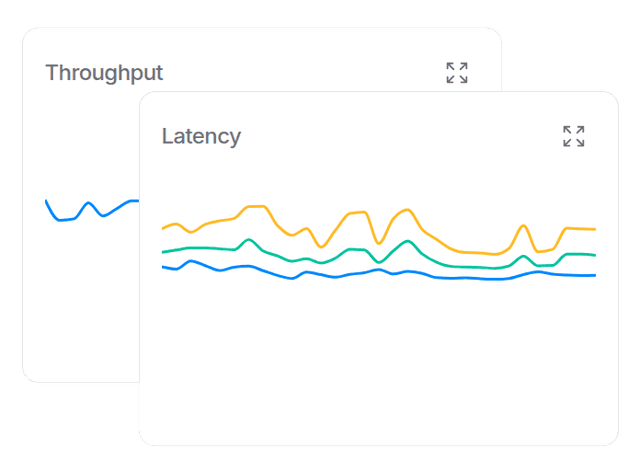

Higher Availability

Get reliable access to AI models through our distributed infrastructure. Requests fall back to other providers when one goes down.
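The fallback idea can be sketched on the client side as an ordered list of providers that is tried until one succeeds. This is only an illustration of the pattern, not a description of how the service routes requests internally; `complete_with_fallback`, `flaky_provider`, and `healthy_provider` are hypothetical names.

```python
from typing import Callable, List, Sequence, Tuple


class AllProvidersFailed(Exception):
    """Raised when every provider in the list has failed."""


def complete_with_fallback(
    prompt: str,
    providers: Sequence[Tuple[str, Callable[[str], str]]],
) -> Tuple[str, str]:
    """Try each (name, call) pair in order; return (name, result) from the first success."""
    errors: List[str] = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:  # a real client would catch narrower error types
            errors.append(f"{name}: {exc}")
    raise AllProvidersFailed("; ".join(errors))


# Hypothetical stand-ins for real provider calls.
def flaky_provider(prompt: str) -> str:
    raise ConnectionError("provider is down")


def healthy_provider(prompt: str) -> str:
    return f"echo: {prompt}"
```

Calling `complete_with_fallback("hi", [("a", flaky_provider), ("b", healthy_provider)])` skips the failing provider and returns the result from the second one.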

Price and Performance

Keep costs in check without sacrificing speed. OpenRouter runs at the edge for minimal latency between your users and their inference.

Custom Data Policies

Protect your organization with fine-grained data policies. Ensure prompts only go to the models and providers you trust.
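Conceptually, a data policy of this kind behaves like a deny-by-default allowlist. The sketch below models that idea in plain Python; the `DataPolicy` class and its fields are hypothetical and do not reflect the actual policy configuration format.

```python
from dataclasses import dataclass, field
from typing import FrozenSet


@dataclass(frozen=True)
class DataPolicy:
    """Hypothetical policy: which models and providers may receive prompts."""

    allowed_models: FrozenSet[str] = field(default_factory=frozenset)
    allowed_providers: FrozenSet[str] = field(default_factory=frozenset)

    def permits(self, model: str, provider: str) -> bool:
        """Deny by default: both the model and the provider must be listed."""
        return model in self.allowed_models and provider in self.allowed_providers


# Illustrative values only.
policy = DataPolicy(
    allowed_models=frozenset({"openai/gpt-4o"}),
    allowed_providers=frozenset({"openai"}),
)
```

With this policy, a prompt is released only when both the model and the provider appear on the allowlists; an empty policy permits nothing.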

Featured Agents

250k+ apps using OpenRouter with 4.2M+ users globally

Sign up

Create an account to get started. You can set up an org for your team later.

Buy credits

Credits can be used with any model or provider.

Get your API key

Create an API key and start making requests. Fully OpenAI-compatible.
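Once a key exists, a common practice is to keep it out of source code and read it from the environment. The following is a minimal sketch of that step, assuming bearer-token authentication as used by OpenAI-style APIs; the variable name `OPENROUTER_API_KEY` and the helper `auth_headers` are assumptions chosen for illustration.

```python
import os
from typing import Dict, Mapping, Optional

# Assumed variable name; use whatever name your deployment prefers.
API_KEY_ENV_VAR = "OPENROUTER_API_KEY"


def auth_headers(env: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Return bearer-token headers, reading the key from the environment."""
    env = os.environ if env is None else env
    key = env.get(API_KEY_ENV_VAR, "").strip()
    if not key:
        # Fail early with a clear message instead of sending an unauthenticated request.
        raise RuntimeError(f"Set {API_KEY_ENV_VAR} before making requests")
    return {"Authorization": f"Bearer {key}"}
```

The optional `env` argument exists so the helper can be exercised with a plain dictionary in tests; in normal use it falls back to `os.environ`.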

Explore Models

Discover AI models across our collection, from all major labs and providers.

Model & App Rankings

Explore token usage across models, labs, and public applications.